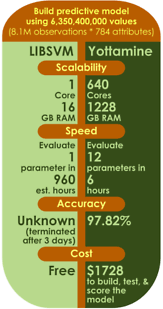

Conventional predictive analytics tools were not made for Big Data, especially when it contains complex, nonlinear behaviors. Many simply can’t handle the data scale. But, sampling down the data can reduce model accuracy.

But, Yottamine’s powerful cluster computing software architecture was designed for Big Data. It allows you to use all the data you can get your hands on – all the data points and all the features you want – and still produce accurate models very quickly, as this test shows.

In this benchmark, we built a 10-way classifier model with 8.1 million data points, each with 784 features. The source data set was the UCI MNIST8M data set, which is known to be nonlinear. Again, the competition was LIBSVM.

For LIBSVM, we selected the Gaussian kernel and used default C and Sigma and set the size of the kernel caching to 8GB. The compute resources were an Intel Xeon server with 4 cores and 16GB of RAM and Ubuntu 10.04.

For Yottamine we used the dense data set version, 43GB in size. The Yottamine software automatically selected optimum Sigma and C parameter values after testing 12 different combinations. The Yottamine compute resources comprised 20 nodes, each with 16 physical cores and 60.5GB of memory, connected by a 10 Gigabit network.

Results

LIBSVM attempted to evaluate a single parameter for 3 days before being terminated. Based on the work completed by the software during that time, it would have taken approximately 40 days to evaluate a single Sigma and C parameter pair. Because LIBSVM could not finish computing the model, the accuracy of the default parameters is indeterminable.

Yottamine evaluated 12 parameter sets in only 6 hours and produced a model with 97.82% accuracy.