Most conventional machine learning software is slow, especially with Big Data.

Even with data sets of relatively modest size, many data scientists find that building an accurate predictive model takes forever. A single parameter test can take hours, and reaching acceptable accuracy can require many such tests.

Yottamine is engineered for fast predictive modeling. It exploits every bit of available compute power to produce very accurate models very quickly, as the following speed test shows.

This benchmark was a binary classification test using 581,012 examples with 54 features, with a 70/30 split between training and test data. The source data was the UCI Covertype data set (Covtype).
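The post does not say how the 70/30 split was drawn (random, stratified, or seeded). As a minimal sketch, assuming a simple seeded random shuffle, here is what such a split looks like for the 581,012 examples in the UCI Covertype data set. The function name `train_test_split` and the seed are illustrative assumptions, not part of either tool.

```python
import random

def train_test_split(n_examples, train_fraction=0.7, seed=0):
    """Shuffle example indices and split them into train/test index lists.

    Hypothetical helper: assumes a plain random split, not necessarily
    the procedure used in the benchmark.
    """
    indices = list(range(n_examples))
    random.Random(seed).shuffle(indices)        # deterministic for a given seed
    n_train = int(n_examples * train_fraction)  # floor of 70% of the examples
    return indices[:n_train], indices[n_train:]

train_idx, test_idx = train_test_split(581_012)
print(len(train_idx), len(test_idx))  # 406708 174304
```

With 581,012 examples, a 70/30 split leaves roughly 406,700 examples for training and 174,300 for testing.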

The test compared the performance of Yottamine’s machine learning algorithms against LIBSVM, one of the most widely used machine learning programs in the world, which is also built into popular packages such as Weka.

To get the best result from LIBSVM, we supplied the data in sparse format, which the program handles more efficiently. We selected the Gaussian (RBF) kernel and kept the program’s default values for C and the kernel width parameter, which LIBSVM calls gamma (C = 1 and gamma = 1/number of features). The software ran on an Intel Xeon server with 4 cores and 16 GB of RAM running Ubuntu 10.04.
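For readers unfamiliar with LIBSVM, the sketch below shows what its sparse input format and Gaussian kernel look like. LIBSVM reads one example per line as `label index:value ...`, with 1-based feature indices and zero-valued features omitted. Its RBF kernel is exp(-gamma * ||x - y||^2). Tools that parameterize the kernel by a width sigma instead use gamma = 1/(2 sigma^2). The helper names are illustrative, not part of LIBSVM's API.

```python
import math

def to_libsvm_line(label, features):
    """Format one example in LIBSVM's sparse text format (illustrative helper).

    Feature indices are 1-based and zero-valued features are omitted,
    which is what makes the format compact for sparse data.
    """
    pairs = [f"{i}:{v:g}" for i, v in enumerate(features, start=1) if v != 0]
    return " ".join([str(label)] + pairs)

def rbf_kernel(x, y, gamma):
    """Gaussian (RBF) kernel as LIBSVM defines it: exp(-gamma * ||x - y||^2)."""
    sq_dist = sum((a - b) ** 2 for a, b in zip(x, y))
    return math.exp(-gamma * sq_dist)

n_features = 54                   # Covtype feature count
default_c = 1.0                   # LIBSVM default cost (-c)
default_gamma = 1.0 / n_features  # LIBSVM default gamma (-g): 1/num_features

print(to_libsvm_line(1, [0.0, 2.5, 0.0, 1.0]))  # 1 2:2.5 4:1
```

On the `svm-train` command line, the RBF kernel is `-t 2` (also the default kernel type). Leaving out `-c` and `-g` gives the default values shown above.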

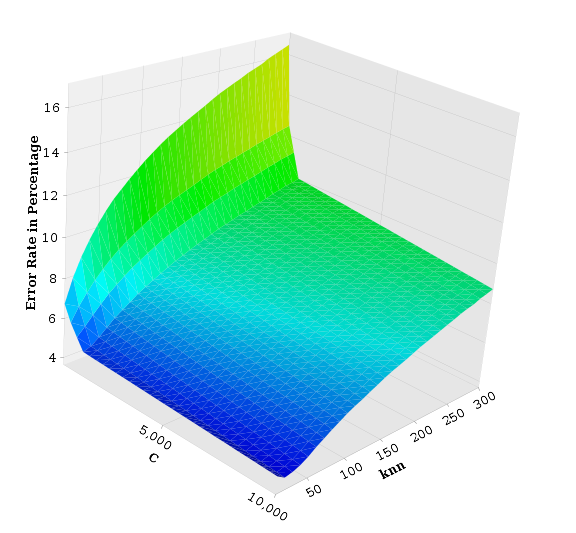

For Yottamine, we used the dense version of the data set, because Yottamine is faster with dense data, and we let Yottamine’s parametric automation select the optimal parameters. The software ran on an AWS cluster of 10 nodes, each with 8 cores and 7 GB of memory, connected by a 1 Gigabit LAN, with all nodes running Ubuntu 10.04 Server.
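The post does not describe how Yottamine’s parametric automation works, so the code below is not its method. As a generic illustration of automated parameter selection for an RBF-kernel SVM, here is an exhaustive grid search over C and gamma. The `evaluate` callback is hypothetical: in practice it would train a model with the given parameters and return validation accuracy. Here a toy scoring function stands in for it. The log-spaced grid ranges are a common choice for SVMs, used for illustration only.

```python
import itertools
import math

def grid_search(evaluate, c_values, gamma_values):
    """Try every (C, gamma) pair and return the best one with its score.

    `evaluate` is a caller-supplied (hypothetical) function that trains a
    model with the given parameters and returns its validation accuracy.
    """
    best_params, best_score = None, -math.inf
    for c, gamma in itertools.product(c_values, gamma_values):
        score = evaluate(c, gamma)
        if score > best_score:
            best_params, best_score = (c, gamma), score
    return best_params, best_score

# Log-spaced grids (illustrative values only).
c_values = [2.0 ** k for k in range(-5, 16, 2)]      # 2^-5 ... 2^15
gamma_values = [2.0 ** k for k in range(-15, 4, 2)]  # 2^-15 ... 2^3

def toy_accuracy(c, gamma):
    """Stand-in scoring function that peaks at C = 2, gamma = 0.125."""
    return -(math.log2(c) - 1) ** 2 - (math.log2(gamma) + 3) ** 2

params, score = grid_search(toy_accuracy, c_values, gamma_values)
print(params)  # (2.0, 0.125)
```

Each (C, gamma) evaluation is independent of the others. That is one reason this kind of search can be spread across many cores or nodes, such as the cluster described above.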

Results

LIBSVM evaluated 1 parameter setting in 6.3 hours, reaching 77.1082% accuracy.

Yottamine evaluated 210 parameter settings in 1.5 hours, reaching 95.75% accuracy.
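To put the two timings on a common footing, we can convert them to average time per parameter setting. The figures below come straight from the results. The core counts come from the hardware descriptions: 4 cores for LIBSVM and 10 nodes × 8 cores = 80 cores for Yottamine. The per-core ratio is only a rough normalization. It assumes cores of comparable speed and ignores differences in memory, network, and data format, none of which the post quantifies.

```python
# Figures taken from the benchmark results and hardware descriptions.
libsvm_hours, libsvm_settings, libsvm_cores = 6.3, 1, 4
yotta_hours, yotta_settings, yotta_cores = 1.5, 210, 10 * 8

def seconds_per_setting(hours, settings):
    """Average wall-clock seconds spent per parameter setting."""
    return hours * 3600 / settings

libsvm_s = seconds_per_setting(libsvm_hours, libsvm_settings)  # 22680 s
yotta_s = seconds_per_setting(yotta_hours, yotta_settings)     # about 25.7 s

wall_clock_ratio = libsvm_s / yotta_s
# Core-seconds per setting: a crude normalization for the different hardware.
core_ratio = (libsvm_s * libsvm_cores) / (yotta_s * yotta_cores)

print(f"{wall_clock_ratio:.0f}x per setting (wall clock)")  # 882x per setting (wall clock)
print(f"{core_ratio:.0f}x per setting (per core)")          # 44x per setting (per core)
```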